Surgeon Cognitive Dashboard — Case Study

Zero-headgear neuro-ergonomics for robotic surgery

For training: a sandbox that teaches why thresholds adapt → a dashboard that coaches in real time → metrics that prove learning happened.

Why I built this

Surgical Safety Technologies (SST) (Research Assistant / AI Annotator).

- Assisted on instance segmentation for tool detection/tracking

- Computed inter-rater reliability (κ/ICC) for analyst labels

- Reviewed ML-for-safety literature to support analyst operations

→ Lesson: labels drift; interfaces & alarm hygiene decide adoption.

UC Riverside (PhD project and dissertation).

- Test concurrent physical (5% vs 40% MVC) × cognitive effort with pupillometry

- Domains: working/long-term memory + auditory/visual discrimination

- Theories: Resource Competition + Neural Gain (adaptive gain)

→ Mechanism: pupil-indexed LC–NE links arousal to performance.

Why this dashboard: combine deployable UX from SST with mechanistic LC–NE insight to deliver a training-first, no-extra-wearables cognitive monitor for robotic trainees.

Executive Summary

Surgery is a cognitive sport. When arousal is balanced, performance peaks; when it’s too low or too high, errors creep in. The OR is tightly constrained—so we avoid new body-worn hardware and lengthy calibration, and instead fuse existing robot telemetry with unobtrusive biosignals to deliver real-time coaching.

I built two tightly coupled tools:

- Training Lab: makes load/fatigue felt and lets instructors choose a threshold policy to match their pedagogy.

- Production Dashboard: runs those policies in real time using pupil + grip/tremor + HRV, tuned to prevent alarm fatigue and respect sterile-field constraints.

Accuracy is necessary, not sufficient. We optimize for operational outcomes—fewer high-load minutes, faster recovery, quicker proficiency—alongside standard model metrics.

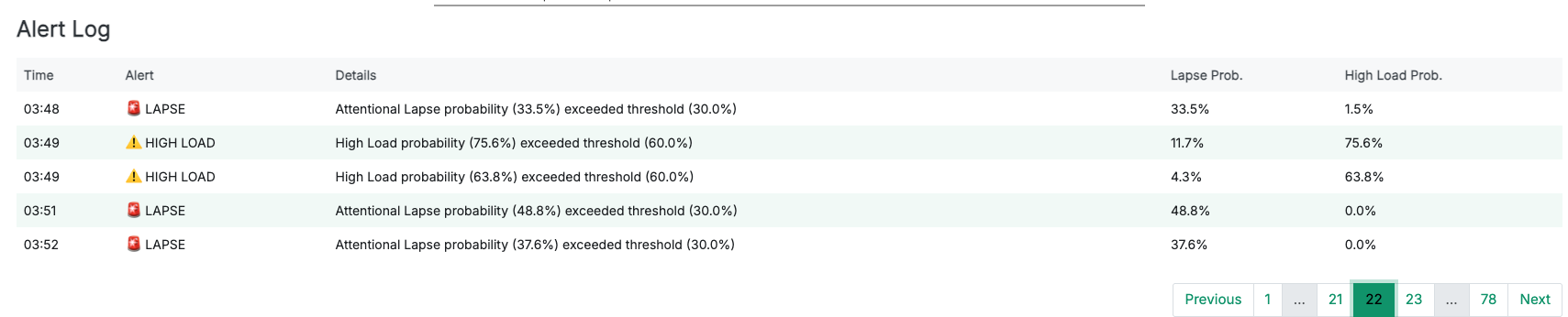

Deployment KPIs: Alert burden ≤ 0.6/min, High-Load min/hr ↓, Time-to-Recovery sec ↓, Acknowledgement rate ↑.

Objectives — what this actually delivers

- Clinical ergonomics, not gadgetry. Non-intrusive biosignals (pupil, HRV, grip/tremor) + robot telemetry → actionable state estimates (Normal / High-Load / Lapse).

- Training outcomes, not scoreboards. Reduce high-load minutes per hour, shorten time-to-recovery after microbreaks, and accelerate time-to-proficiency.

- Pedagogy built-in. Three threshold policies map to real teaching strategies: Adaptive Gain (arousal sweet-spot), Dual-Criterion (SDT) (false-alarm control + hysteresis), Time-on-Task (fatigue & microbreaks).

- Deployable now. Sterile-field-friendly, <60-second setup; sensible defaults, guardrails against alarm spam, and readable explanations.

Who benefits — and why

- Residents. See when trainees drift into low arousal or overload; get just-enough nudge (microbreaks, pacing, framing) without flooding them.

- Instructors. A common language for when to step in: high-load confirmed by TEPR↑ + HRV↓, lapse suggested by low TEPR + blink anomalies.

- Program directors / ops. Track high-load min/hr, recovery latency, and alarm burden across sessions to prove training impact and tune curricula.

Personalized coaching — how it adapts to the trainee

Idea. Each trainee has a different “inverted-U” width and bias. We infer a coaching profile from early sessions:

- Wide band: tolerates more challenge before overload → allow denser feedback; push complexity sooner.

- Narrow band: tips into overload quickly → favor microbreaks, chunk tasks, reduce concurrent demands.

Heuristics (evidence-informed, lightweight):

- Likely overload (right limb): z(TEPR) ≥ +0.8 AND RMSSD ↓ ≥ 25–35% over 60–120 s → suggest microbreak or slow tool motions for 60–120 s.

- Likely lapse (left side): z(TEPR) ≤ −0.5 OR blink bursts + stable HRV → prompt re-centering (brief cueing, checklist, or instructor query).

- Stable but trending: slope(High-Load prob, 90 s) > 0 with rising grip CV → anticipatory nudge (“prepare break after this step”).

Not a diagnosis. This is training feedback, not medical inference. Requires individual calibration and instructor judgment.
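The three heuristics can be sketched as a small rule function. This is a minimal illustration, not the dashboard's implementation: the `Window` fields and the `coaching_hint` name are hypothetical, the RMSSD drop cutoff defaults to the low end of the 25–35% range, and "blink bursts + stable HRV" is read as a joint condition.

```python
from dataclasses import dataclass


@dataclass
class Window:
    """Summary of one 60-120 s analysis window (field names are assumptions)."""
    z_tepr: float           # z-scored TEPR
    rmssd_drop_pct: float   # RMSSD drop vs. baseline, in percent (positive = drop)
    blink_burst: bool       # blink-burst anomaly detected
    hrv_stable: bool        # HRV roughly unchanged over the window
    hl_prob_slope: float    # slope of High-Load probability over the last 90 s
    grip_cv_rising: bool    # grip coefficient of variation trending up


def coaching_hint(w: Window, rmssd_drop_cutoff: float = 25.0) -> str:
    """Return 'overload', 'lapse', 'trending', or 'none' for one window."""
    # Right limb of the inverted-U: high TEPR together with a marked RMSSD drop.
    if w.z_tepr >= 0.8 and w.rmssd_drop_pct >= rmssd_drop_cutoff:
        return "overload"
    # Left side: low TEPR, or blink bursts while HRV stays stable.
    if w.z_tepr <= -0.5 or (w.blink_burst and w.hrv_stable):
        return "lapse"
    # Still normal, but High-Load probability and grip variability are rising.
    if w.hl_prob_slope > 0 and w.grip_cv_rising:
        return "trending"
    return "none"


hint = coaching_hint(Window(1.0, 30.0, False, False, 0.0, False))
```

Checking overload first means a window that satisfies several rules gets the most urgent label; a per-trainee profile would adjust the cutoffs rather than the rule order.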

If we want to surface the coaching logic UI-side, drop this explanatory microcopy under the Live Monitor:

Coaching logic (transparent): Alerts fire on evidence + physiology with hysteresis to prevent chatter. HL requires TEPR↑ or HRV↓; Lapse prefers low TEPR + blink anomalies. Policies then shift the decision criteria (SDT), target the sweet-spot (Adaptive Gain), or relax with time (Fatigue).

Threshold Policies — how they work

One problem, three lenses. Each policy below answers: what changes, when to use it, and how it maps to the production dashboard defaults.

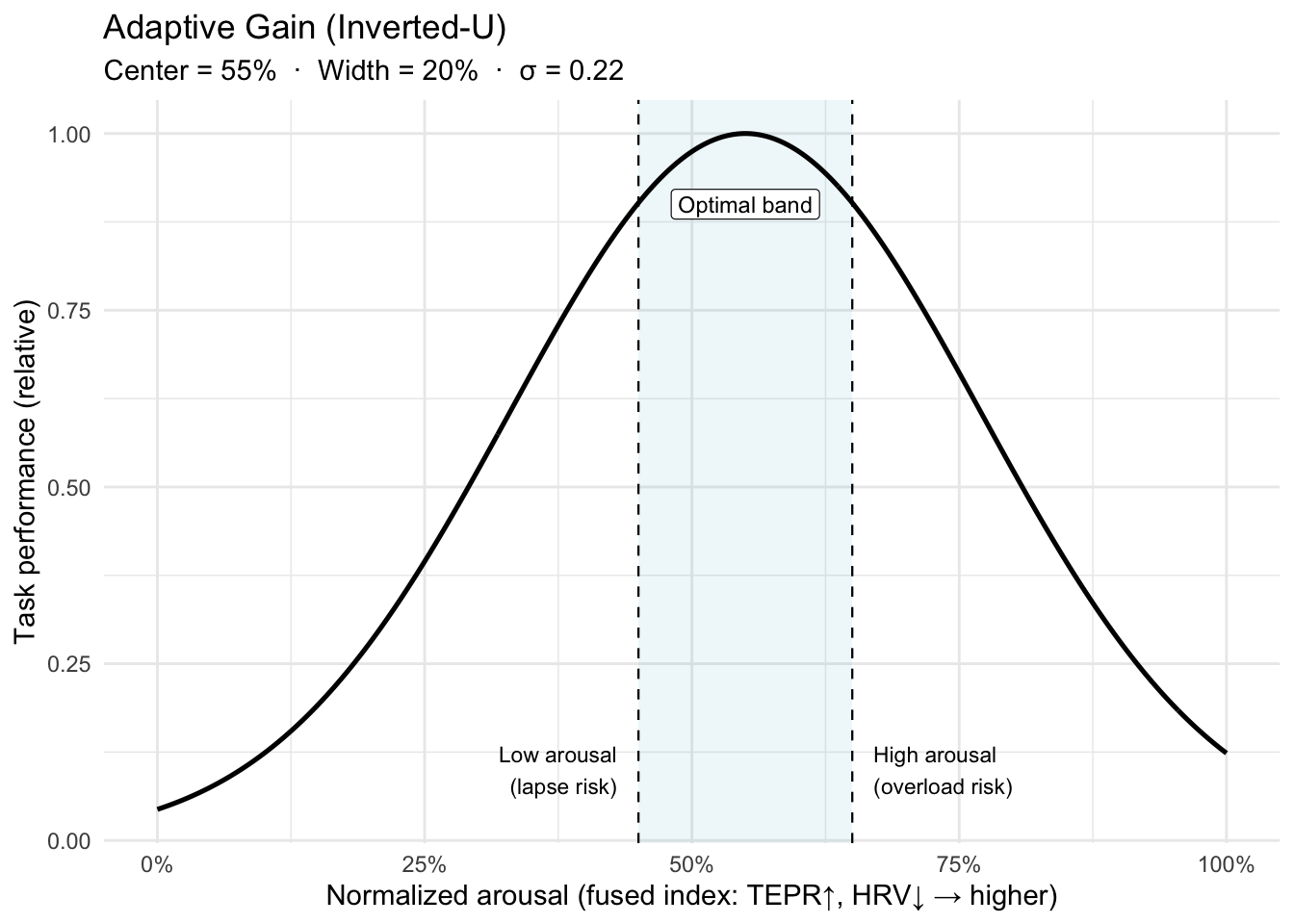

X-axis detail: Normalized arousal = fused TEPR + HRV index ∈ [0,1].

Maps to Dashboard. We fuse TEPR (↑) and HRV (↓) into a normalized arousal index ∈ [0,1]. Alerts fire when the index leaves the optimal band [lo, hi].

- Wider band → fewer alerts, gentler coaching.

- Narrower band → tighter coaching, higher nuisance risk.

- We add hysteresis (small margins) to prevent alert “chatter” at band edges.

Notes for accuracy.

- The inverted-U is task- and person-dependent: expertise, stakes, and fatigue shift the peak (band_center) and width (band_width). That’s why we calibrate per trainee.

- “Adaptive gain” is the mechanism (LC-NE modulating neural gain) that can yield this non-monotonic performance curve.
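To make the band mechanics concrete, here is a minimal sketch of the fused index and the band check. The fusion rule (a logistic squash of z(TEPR) minus z(RMSSD)) is an assumption, since the exact fusion is not specified here; `band_center` and `band_width` follow the names used above, while the function names are hypothetical.

```python
import math


def arousal_index(z_tepr: float, z_rmssd: float) -> float:
    """Fuse TEPR (up) and RMSSD (down) into [0, 1]; the logistic is an assumed choice."""
    return 1.0 / (1.0 + math.exp(-(z_tepr - z_rmssd) / 2.0))


def band_edges(band_center: float, band_width: float) -> tuple:
    """Convert center/width into [lo, hi], clipped to the index range."""
    half = band_width / 2.0
    return max(0.0, band_center - half), min(1.0, band_center + half)


def out_of_band_count(index, lo: float, hi: float) -> int:
    """Count samples that would raise an alert (no hysteresis applied here)."""
    return sum(1 for x in index if x < lo or x > hi)


trace = [0.30, 0.45, 0.50, 0.62, 0.72]
narrow = out_of_band_count(trace, *band_edges(0.5, 0.2))  # band [0.4, 0.6]
wide = out_of_band_count(trace, *band_edges(0.5, 0.5))    # band [0.25, 0.75]
```

On this toy trace the narrow band flags more samples than the wide one, which is the trade-off between tighter coaching and nuisance risk.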

References. Yerkes–Dodson (1908); Aston-Jones & Cohen (2005, adaptive gain).

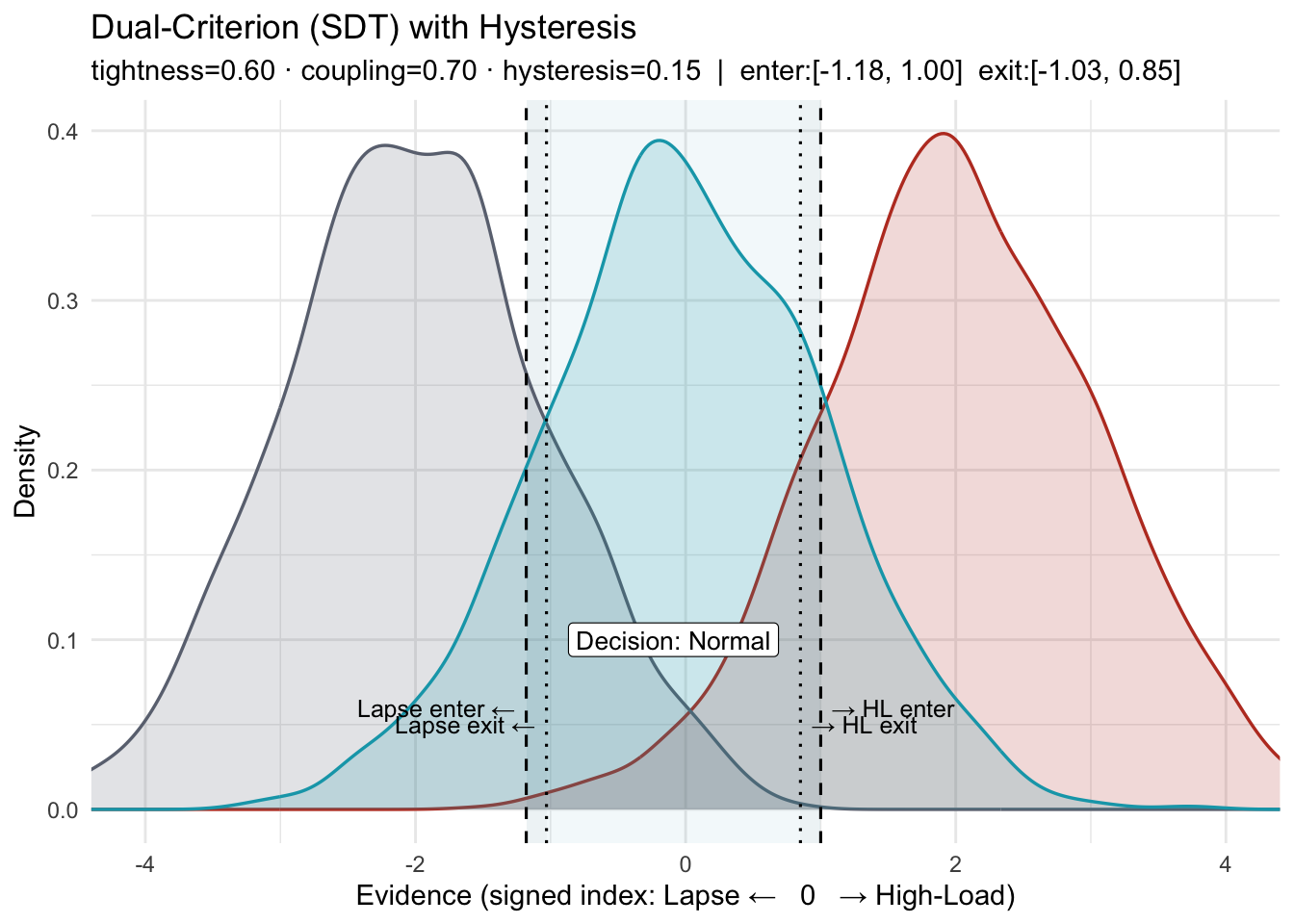

X-axis: a z-scored, signed evidence index from biosignals (e.g., TEPR↑, RMSSD↓). Higher → High-Load; lower → Lapse.

- What this shows. A three-state SDT layout (Lapse / Normal / High-Load) with two decision criteria and hysteresis (separate enter/exit thresholds).

- criterion_tightness narrows or widens the Normal window by moving both criteria toward or away from 0.

- coupling controls symmetry: values < 1 move the Lapse boundary less than the High-Load boundary; values > 1 move it more.

- Why “Dual-Criterion,” not “Sensitivity.” In SDT, sensitivity is d′ (distribution separation). This policy adjusts decision criteria (bias), not d′.

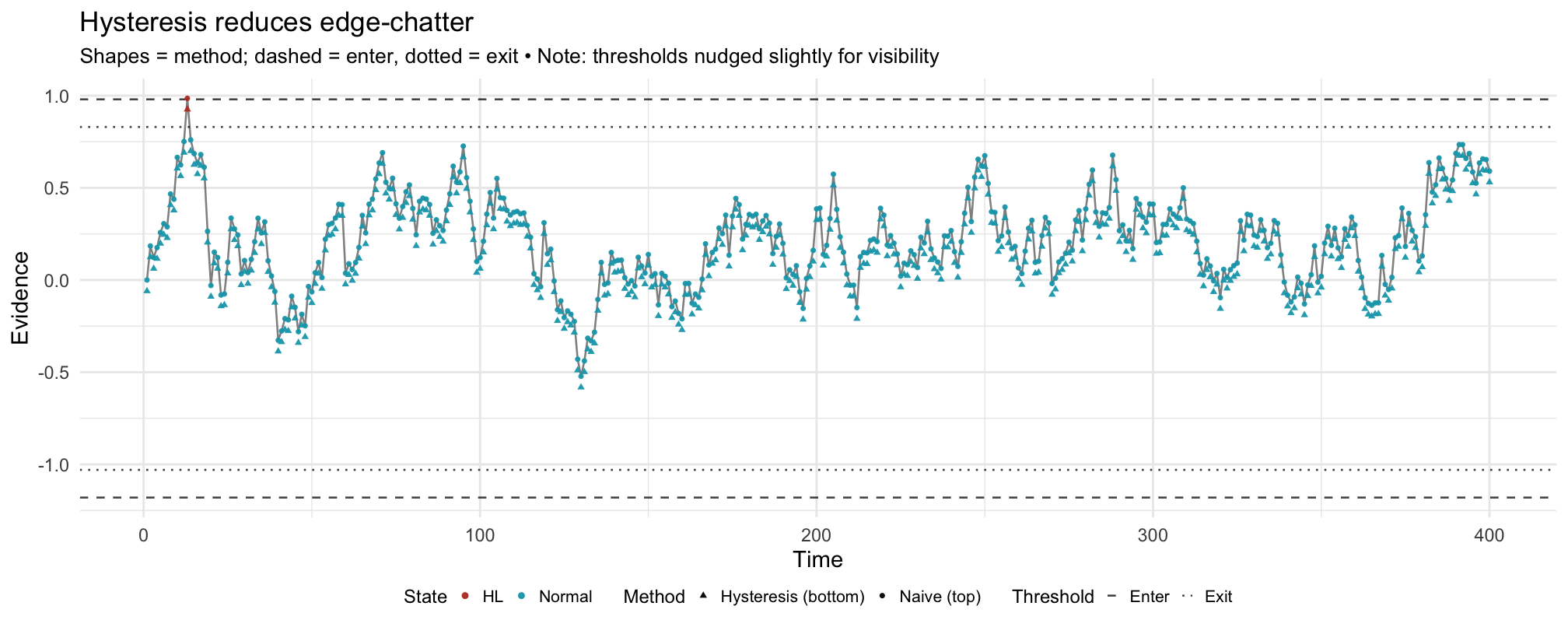

How the two figures relate: The first figure defines the boundaries (enter/exit on each side). The second applies the same boundaries—nudged slightly if needed so at least one crossing is visible—to a noisy signal, showing how hysteresis prevents flip-flops near a boundary.

- Hysteresis. Enter/exit thresholds (and/or consecutive-sample confirmation) require persistent evidence to change state—reducing nuisance alerts.

- Maps to Dashboard. The control sets the HL and Lapse thresholds together. Alerts still require evidence + physiology (e.g., HL requires TEPR↑ or HRV↓; Lapse prefers low TEPR + blink anomalies).

- References. Macmillan & Creelman (Signal Detection Theory); NASA-TLX background (Hart & Staveland).
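The dual-criterion logic with hysteresis can be sketched as a three-state machine. This is an illustration under assumptions: the base criteria (±1.0), the linear way `criterion_tightness` and `coupling` shift them, and the `gap` between enter and exit thresholds are all hypothetical choices, not the dashboard's actual parameters.

```python
def criteria(criterion_tightness: float, coupling: float = 1.0,
             base_hl: float = 1.0, base_lapse: float = -1.0) -> tuple:
    """Positive tightness moves both criteria toward 0 (narrower Normal window).

    coupling scales how far the Lapse boundary moves relative to High-Load.
    """
    hl = base_hl - criterion_tightness
    lapse = base_lapse + coupling * criterion_tightness
    return lapse, hl


def classify(evidence, lapse_c: float, hl_c: float, gap: float = 0.2) -> list:
    """Label each z-scored evidence sample, with separate enter/exit thresholds."""
    state = "Normal"
    states = []
    for z in evidence:
        if state == "High-Load" and z > hl_c - gap:
            pass  # stay in High-Load until evidence falls below the exit threshold
        elif state == "Lapse" and z < lapse_c + gap:
            pass  # stay in Lapse until evidence rises above the exit threshold
        elif z >= hl_c:
            state = "High-Load"
        elif z <= lapse_c:
            state = "Lapse"
        else:
            state = "Normal"
        states.append(state)
    return states


lapse_c, hl_c = criteria(criterion_tightness=0.2, coupling=0.5)
with_hysteresis = classify([0.0, 0.85, 0.75, 0.65, 0.55, -1.0], lapse_c, hl_c)
naive = classify([0.0, 0.85, 0.75, 0.65, 0.55, -1.0], lapse_c, hl_c, gap=0.0)
```

With `gap=0.0` the state drops back to Normal as soon as evidence dips under the entry criterion; with a positive gap it holds until evidence clearly recedes, which is what suppresses flip-flops near a boundary.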

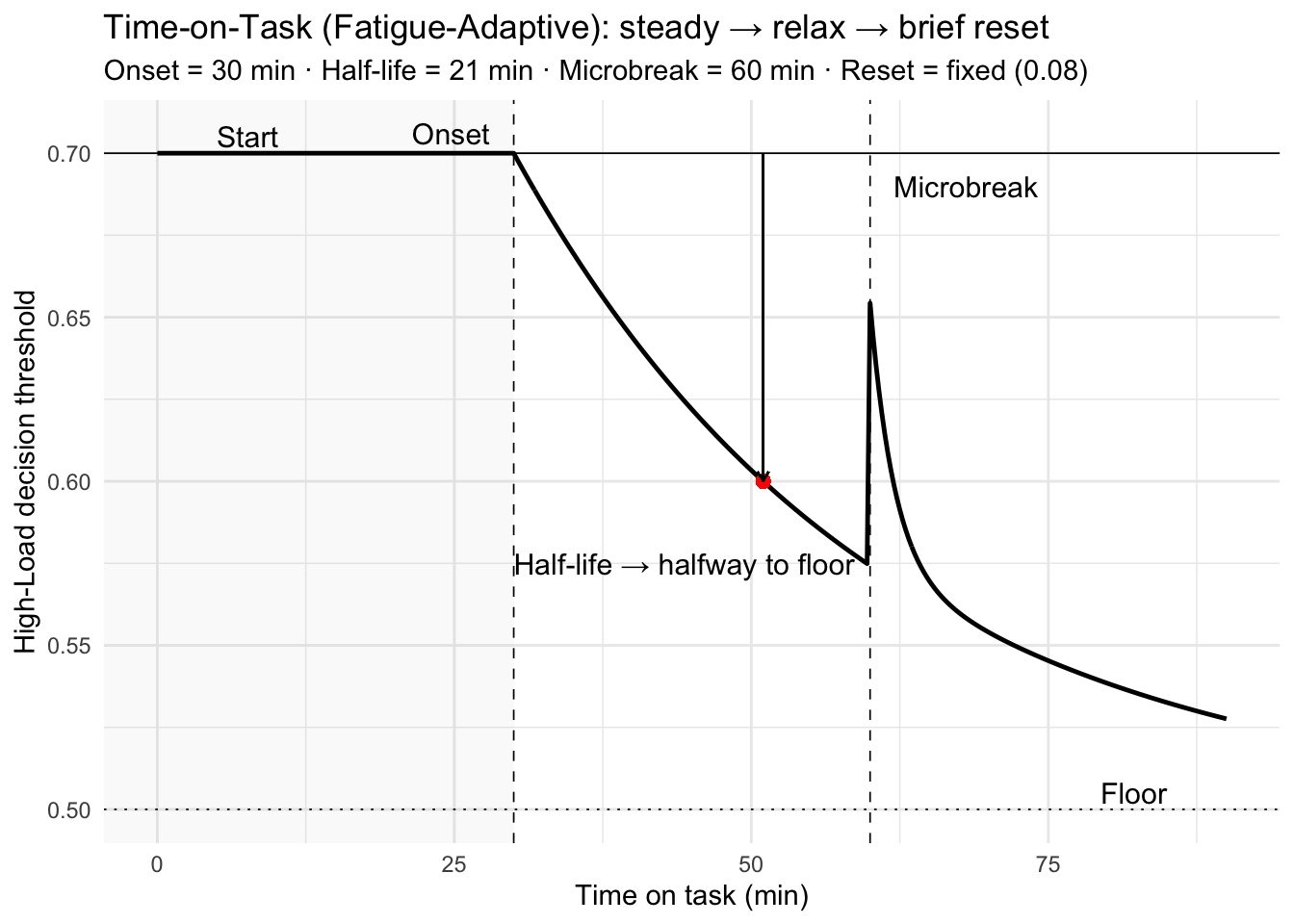

How to read this policy

- Pre-onset (t < 30 min): the decision threshold stays at 0.70.

- Post-onset: the threshold relaxes exponentially toward the floor 0.50, i.e., less evidence is needed to flag High-Load as vigilance degrades.

- Microbreak (t = 60 min): a small reset tightens the threshold, then decays over ~2 min. (Demo uses a fixed, modest reset.)

- Half-life: at t = onset + half-life = 30 + 21 = 51 min, the threshold sits halfway between 0.70 and 0.50, i.e., at 0.60.

- Design intent: this is a controller on the criterion—transparent, auditable, and tunable per surgeon/task; it does not assume the evidence itself drifts.
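The schedule described above can be written as a single function of elapsed time. The values 0.70, 0.50, 30 min, and 21 min come from the figure description; the reset size (0.05) and the linear fade of the reset over 2 min are assumptions for illustration, and the function name is hypothetical.

```python
def fatigue_threshold(t_min: float, start: float = 0.70, floor: float = 0.50,
                      onset_min: float = 30.0, fatigue_half_life_min: float = 21.0,
                      break_min: float = None, reset: float = 0.05,
                      reset_decay_min: float = 2.0) -> float:
    """Decision threshold at t_min minutes into the session."""
    if t_min < onset_min:
        base = start  # hold phase before fatigue onset
    else:
        # Exponential relaxation toward the floor with the given half-life.
        elapsed = t_min - onset_min
        base = floor + (start - floor) * 0.5 ** (elapsed / fatigue_half_life_min)
    bump = 0.0
    if break_min is not None and t_min >= break_min:
        # Assumed: the microbreak reset fades linearly to zero over reset_decay_min.
        bump = reset * max(0.0, 1.0 - (t_min - break_min) / reset_decay_min)
    return min(start, base + bump)  # never tighter than the starting threshold


at_half_life = fatigue_threshold(51.0)               # 30 + 21 min
right_after_break = fatigue_threshold(60.0, break_min=60.0)
```

Because the controller acts only on the criterion, the same function can be audited or re-tuned per surgeon without touching the evidence model.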

Why a policy like this? Vigilance typically declines with time-on-task while perceived workload rises; brief microbreaks improve comfort and focus without harming flow. Pupil/HRV often show fast, partial recovery over 1–3 minutes. A time-scheduled, small criterion reset captures these operational realities without heavy modeling.

Good defaults (to be tuned):

- onset_min: 20–30 min

- fatigue_half_life_min: 15–30 min

- reset: proportional to observed pre/post microbreak recovery, with decay 1–3 min.

References.

Warm, Parasuraman & Matthews (2008). Vigilance requires hard mental work and is stressful. Human Factors, 50(3), 433–441. DOI: 10.1518/001872008X312152

Dorion & Darveau (2013). Do micropauses prevent surgeon’s fatigue and loss of accuracy? Ann Surg, 257(2), 256–259. DOI: 10.1097/SLA.0b013e31825efe87

Park et al. (2017). Intraoperative “microbreaks” with exercises reduce surgeons’ musculoskeletal injuries. J Surg Res, 211, 24–31. DOI: 10.1016/j.jss.2016.11.017

Hallbeck et al. (2017). The impact of intraoperative microbreaks… Applied Ergonomics, 60, 334–341. DOI: 10.1016/j.apergo.2016.12.006

Luger et al. (2023). One-minute rest breaks mitigate healthcare worker stress. JMIR, 25, e44804. DOI: 10.2196/44804

Unsworth & Robison (2018). Tracking arousal with pupillometry. Cogn Affect Behav Neurosci, 18, 638–664. DOI: 10.3758/s13415-018-0604-8

Why thresholds must adapt

Switch between policies on the same stream. Notice how the same physiologic trace produces different coaching behavior. Policy is a choice, and it should be teachable.

Production Dashboard

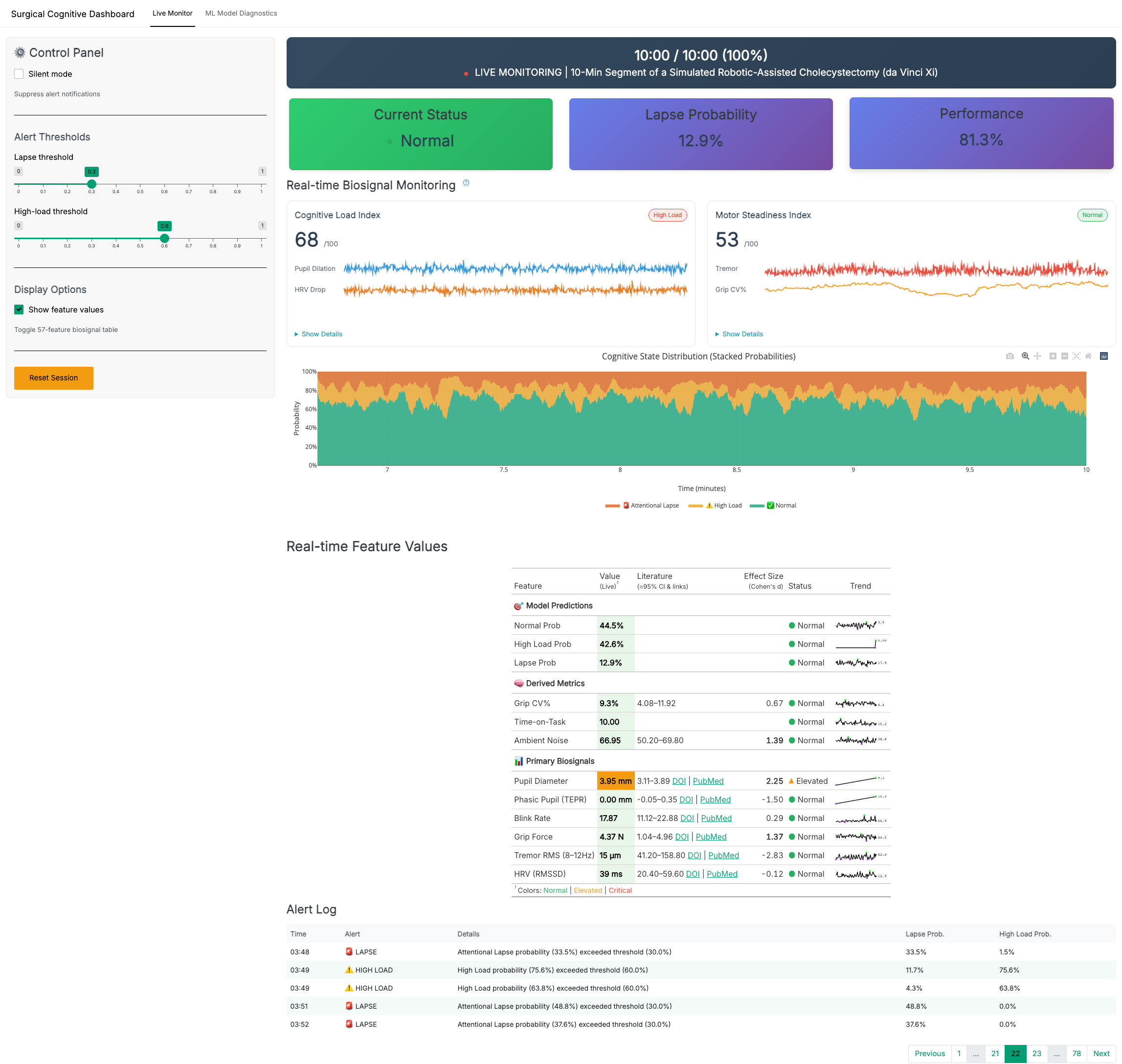

Live Monitor Overview


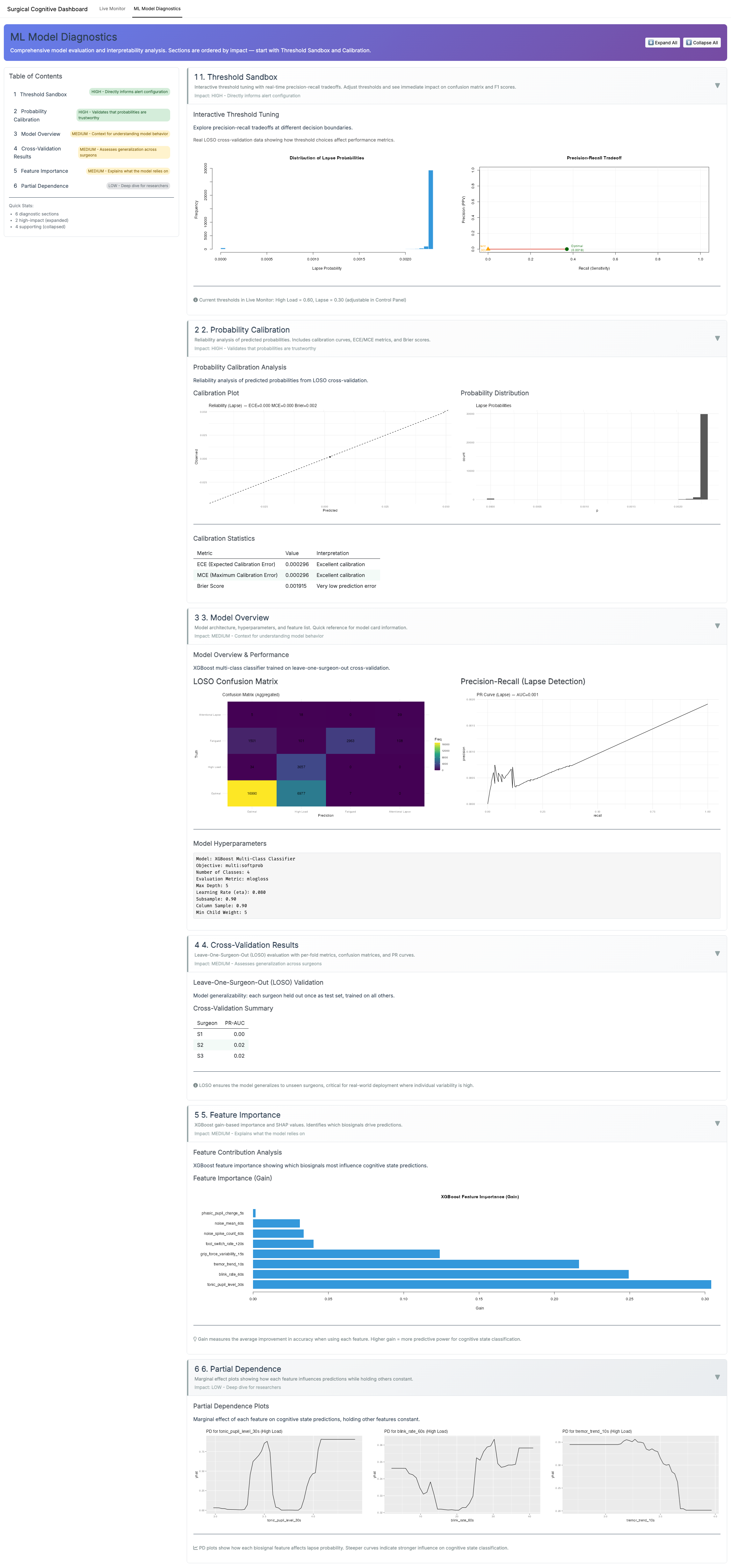

ML Diagnostics Overview


Methods (what runs under the hood)

Data & provenance.

We use (a) synthetic sessions produced by the same code paths as the live app (seeded; 10–30 min, 50–100 Hz raw) and (b) replayable demo logs exported from the dashboard. All analyses on this page use time-stamped streams (UTC, ms).

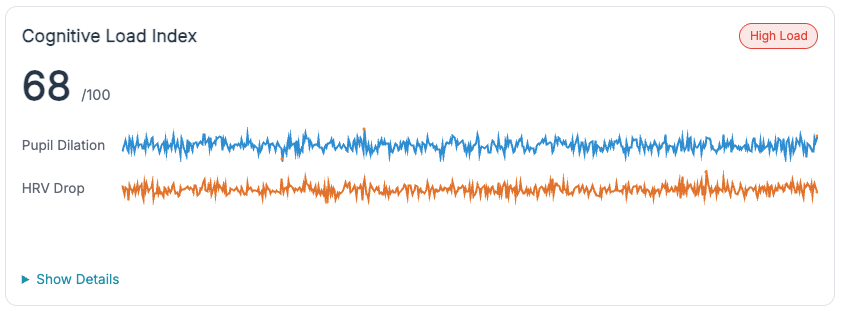

Signals & sampling.

Pupil diameter (mm; eye tracker), HRV from PPG/ECG (ms), grip force (N), tool-tip tremor (μm, 8–12 Hz band), blinks (blinks/min), ambient noise (dB). Signals are synchronized to a common clock and resampled to 10 Hz for feature windows.

Preprocessing.

- Pupil: blink interpolation → robust z-scoring within session; TEPR = event-locked delta (2–5 s).

- HRV: RMSSD computed over rolling 60 s (also 30/120 s for sensitivity); artifact correction via outlier trimming (5×MAD).

- Grip/tremor: 2–10 s rolling mean/SD; tremor via band-pass and RMS.

- Blink rate: rolling 60 s count; winsorized (1st/99th pct).
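As an example of the HRV step, here is a minimal sketch of MAD-based outlier trimming followed by RMSSD over one window of inter-beat intervals. It assumes the window has already been cut to 60 s; the function names are hypothetical, and dropping beats before differencing is a simplification (adjacent differences then span the removed beat).

```python
import math
from statistics import median


def mad_trim(rr_ms, k: float = 5.0) -> list:
    """Drop inter-beat intervals more than k * MAD away from the median."""
    med = median(rr_ms)
    mad = median([abs(x - med) for x in rr_ms])
    if mad == 0:
        return list(rr_ms)  # no spread to judge outliers against
    return [x for x in rr_ms if abs(x - med) <= k * mad]


def rmssd(rr_ms) -> float:
    """Root mean square of successive differences, in ms."""
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    if not diffs:
        return float("nan")
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


rr = [800, 810, 790, 805, 1600, 795]  # one missed-beat artifact at 1600 ms
clean = mad_trim(rr)
rmssd_clean = rmssd(clean)
```

A single missed beat inflates RMSSD by an order of magnitude, which is why the trimming happens before the rolling statistic rather than after.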

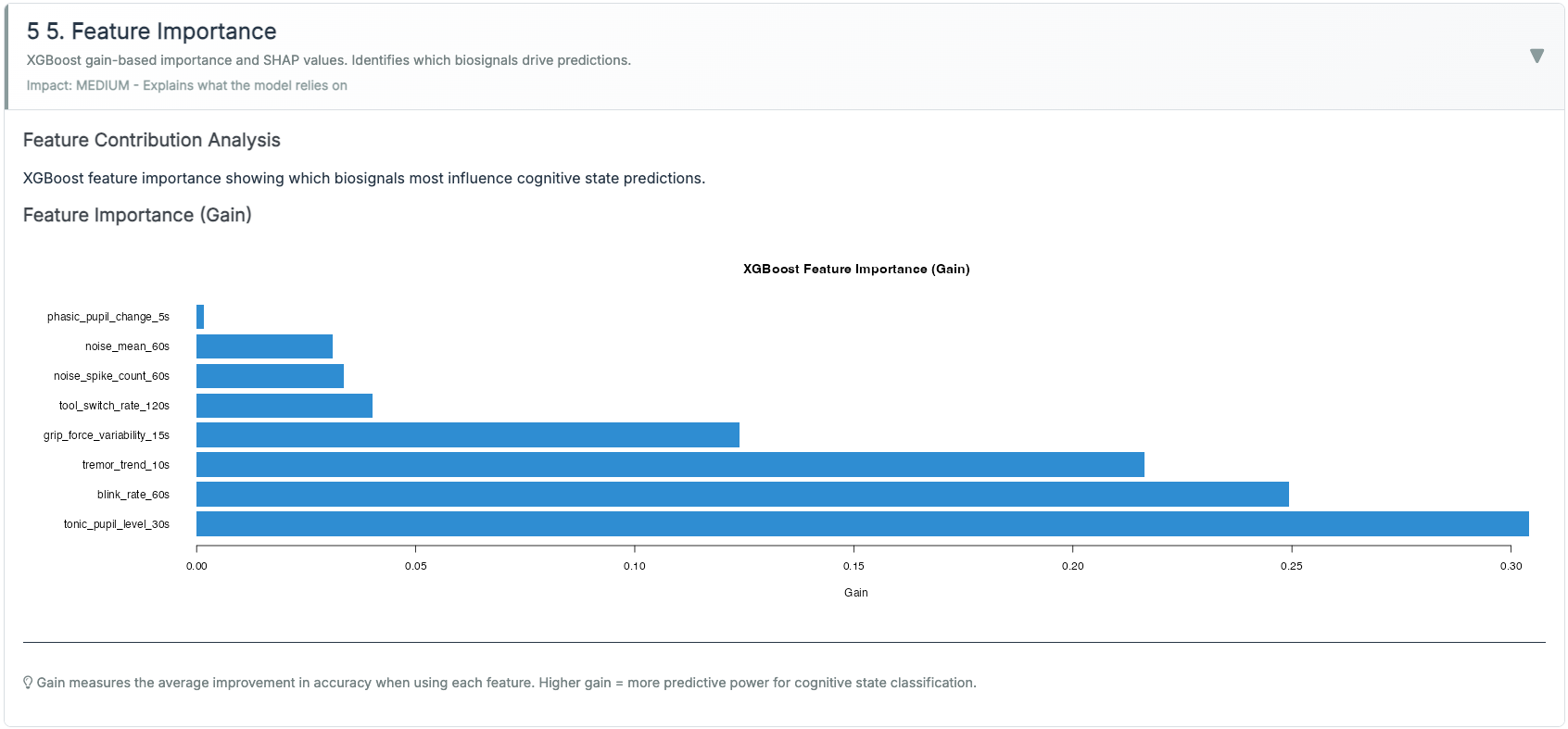

Features (examples; all z-scored within session).

- Pupil: tonic_30s_mean; tepr_5s_delta; pupil_cv_30s.

- HRV: rmssd_60s; rmssd_slope_60s; rmssd_ma_180s; rmssd×tepr interaction.

- Grip/Tremor: grip_mean_15s; grip_cv_15s; tremor_rms_10s; tremor_trend_60s.

- Ocular: blink_rate_60s; fixation_var_30s.

- Context: noise_db_30s.

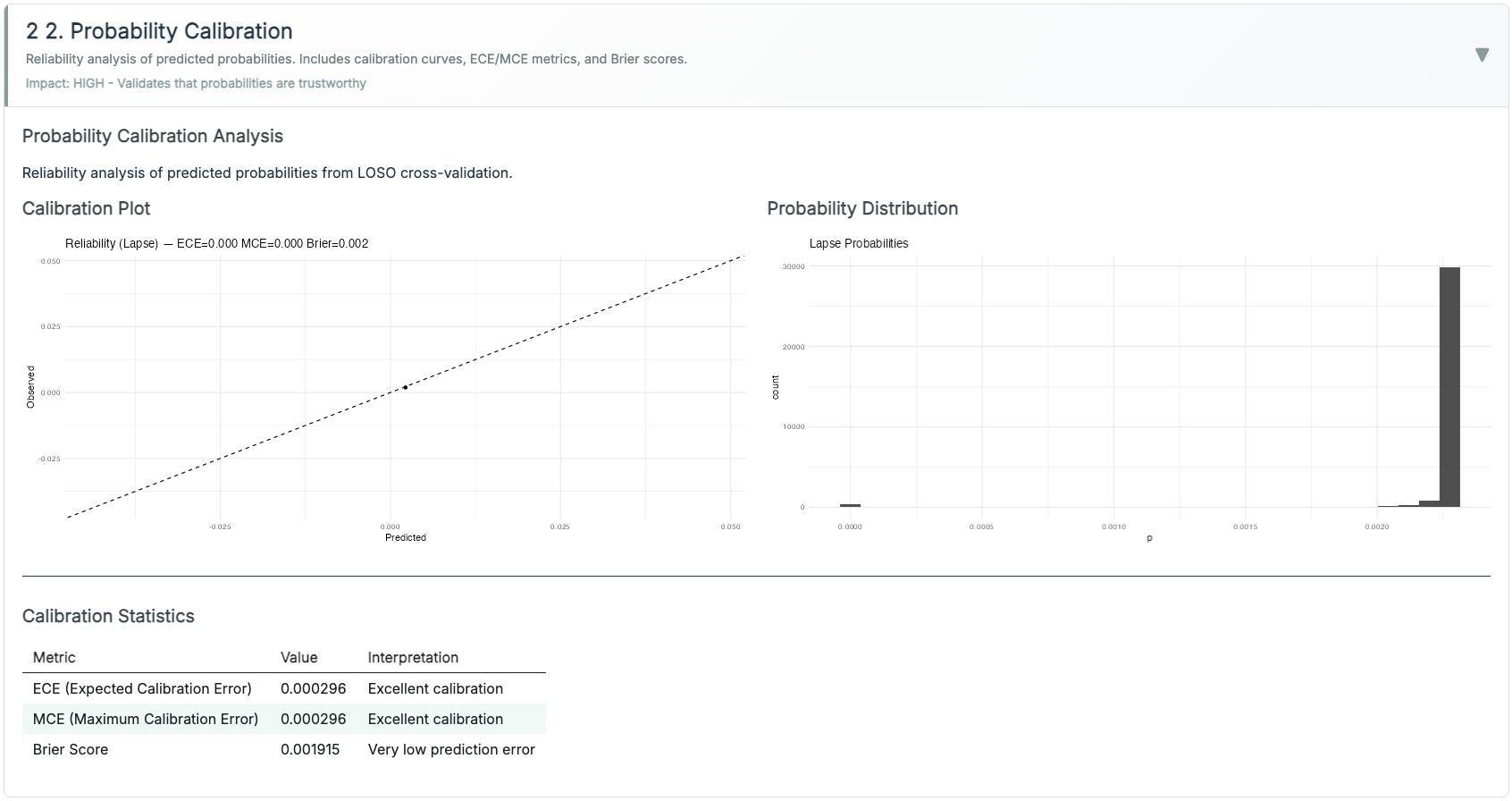

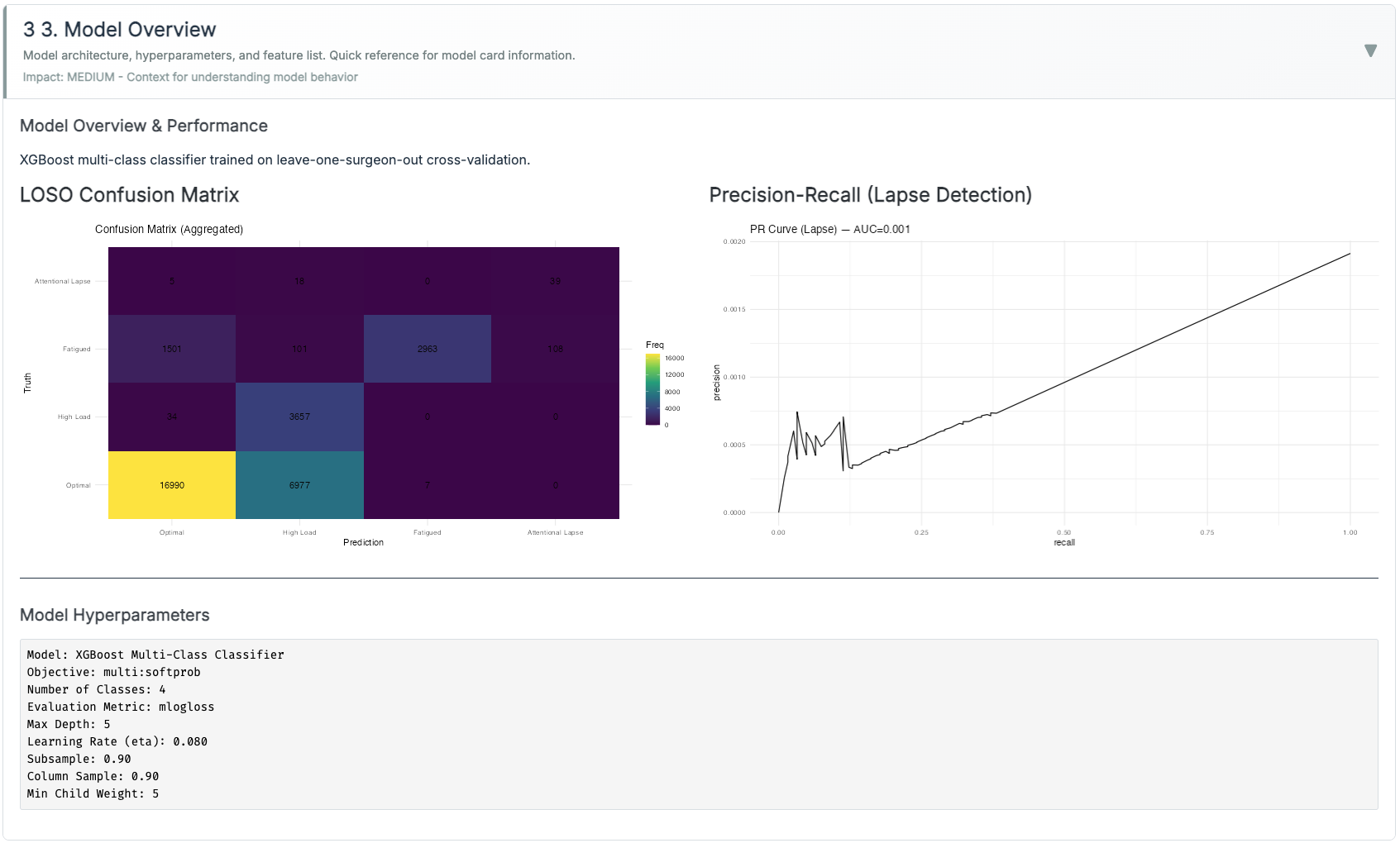

Modeling.

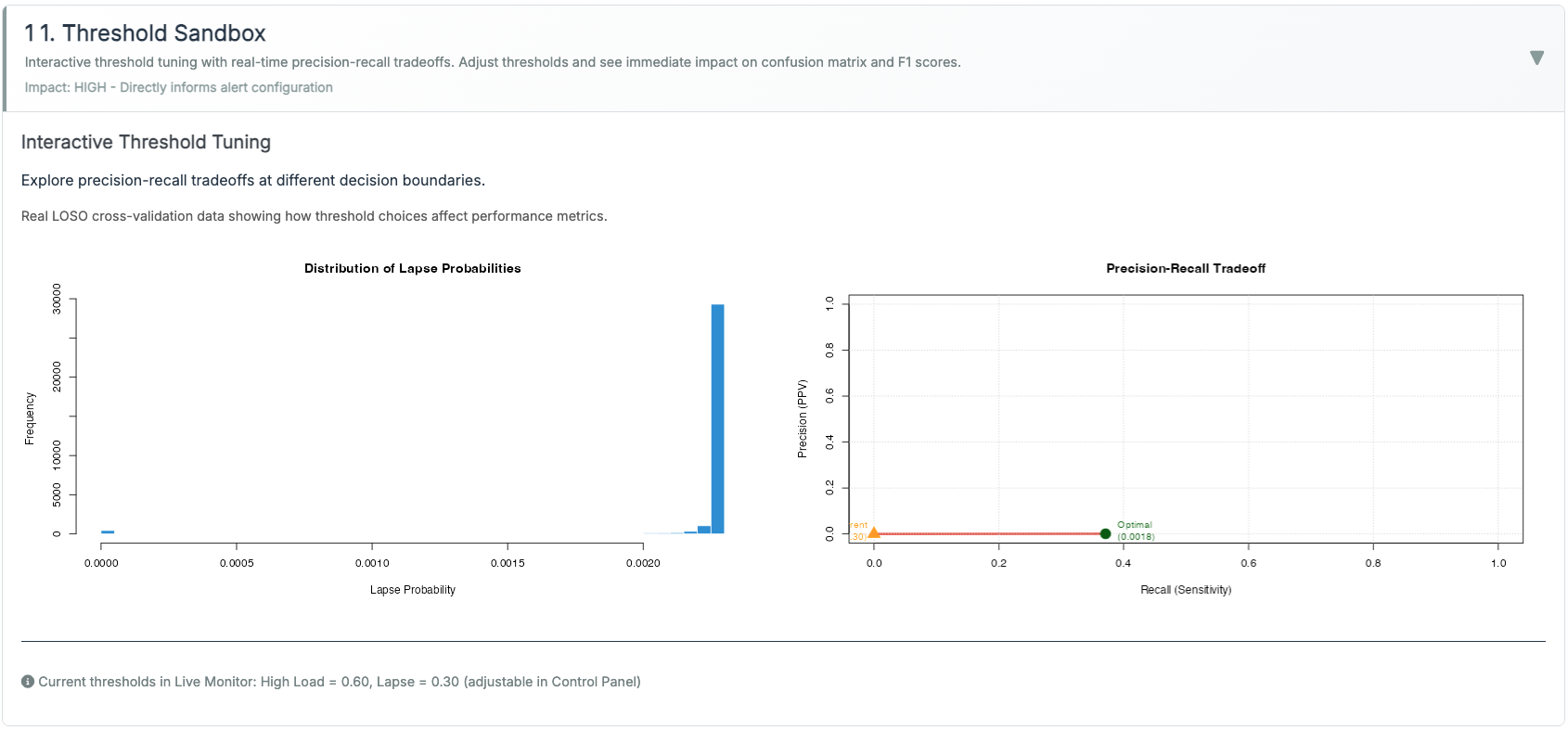

XGBoost (multi:softprob, 3 classes: Normal / High-Load / Lapse). Class imbalance handled via class weights (rare Lapse upweighted) and PR-AUC monitoring. Lapse probability is calibrated with Platt scaling (logistic on validation folds). We report ECE and Brier.
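For readers unfamiliar with the calibration metrics, the sketch below shows how Platt scaling is applied and how Brier score and ECE are computed for a binary Lapse-vs-rest view. It is pure Python for clarity, not the production code; the Platt coefficients `a` and `b` are assumed to have been fit on validation folds, and the equal-width binning for ECE is one common convention.

```python
import math


def platt(score: float, a: float, b: float) -> float:
    """Map a raw score to a calibrated probability: sigmoid(a * score + b)."""
    return 1.0 / (1.0 + math.exp(-(a * score + b)))


def brier(probs, labels) -> float:
    """Mean squared error between predicted probability and 0/1 outcome."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(probs)


def ece(probs, labels, n_bins: int = 10) -> float:
    """Expected calibration error with equal-width probability bins."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        i = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[i].append((p, y))
    total = len(probs)
    err = 0.0
    for b in bins:
        if b:
            conf = sum(p for p, _ in b) / len(b)  # mean predicted probability
            acc = sum(y for _, y in b) / len(b)   # observed Lapse frequency
            err += len(b) / total * abs(conf - acc)
    return err


overconfident = ece([0.9, 0.9, 0.9, 0.9], [1, 1, 1, 0])
```

In the toy call, four predictions of 0.9 with three true Lapses give a 0.15 gap between confidence and observed frequency.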

Threshold policies (runtime).

- Adaptive Gain (Inverted-U): keeps evidence within an optimal band.

- Dual-Criterion (SDT) with hysteresis: two criteria + enter/exit guards.

- Time-on-Task (Fatigue): hold → relax with half-life; microbreak gives a decaying reset.

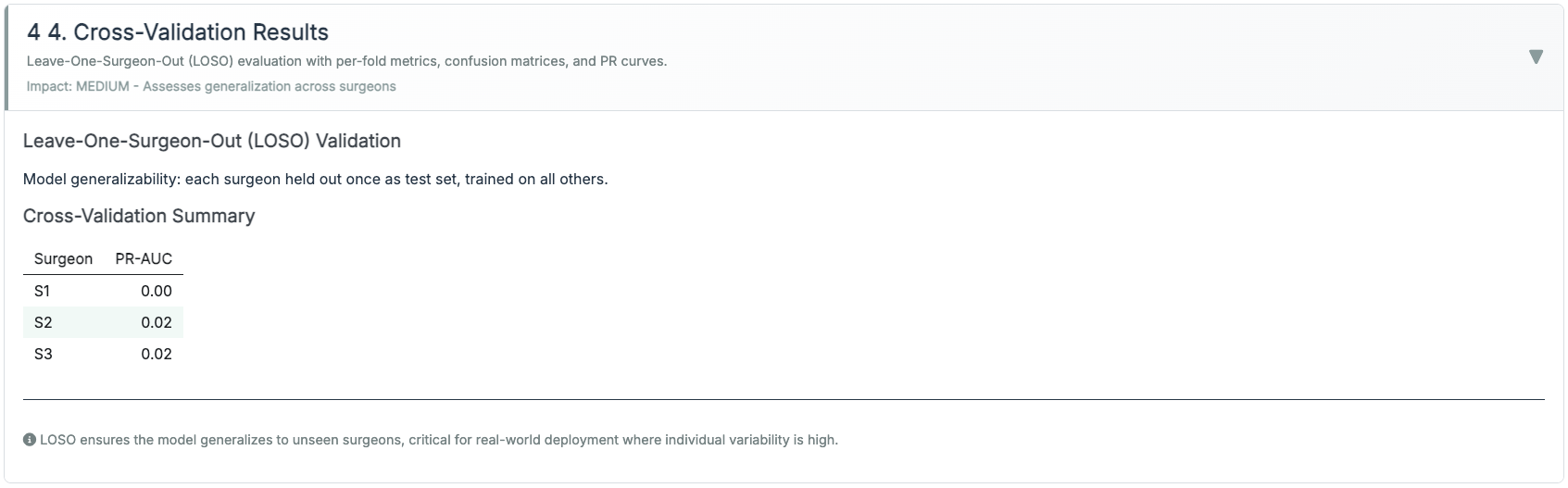

Validation.

Leave-One-Surgeon-Out (LOSO) where available; otherwise session-level 5× CV with subject leakage prevented. Hyperparameters chosen by Bayesian search, then frozen.
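A Leave-One-Surgeon-Out split can be sketched in a few lines. This is an illustration with a hypothetical helper name; it only shows the grouping logic that keeps every window from the held-out surgeon out of training.

```python
def loso_splits(surgeon_ids):
    """Yield (held_out, train_idx, test_idx), one fold per surgeon."""
    for held_out in sorted(set(surgeon_ids)):
        train = [i for i, s in enumerate(surgeon_ids) if s != held_out]
        test = [i for i, s in enumerate(surgeon_ids) if s == held_out]
        yield held_out, train, test


# One surgeon ID per feature window (toy example).
ids = ["A", "A", "B", "C", "B"]
folds = list(loso_splits(ids))
```

Splitting by surgeon rather than by row is what prevents subject leakage: within-session z-scored features from the same person never appear on both sides of a fold.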

Reproducibility.

Seeds fixed; all plots are generated by scripts/90_make_gallery.R, so the case study visuals match the live app implementation.

Methods & results that matter (ops KPIs)

We track outcomes that training leaders can act on:

- High-load minutes per hour (↓ is better). Target ≥20–30% reduction after policy tuning.

- Recovery latency after alerts/microbreaks (↓). Time for HRV/tremor to normalize; aim for <120 s median.

- Alarm burden & acceptance (↘ nuisance, ↗ adherence). Alerts/hour, % acknowledged, % acted upon.

- Stability (↗). Fewer state flips near thresholds with hysteresis vs naive criteria.

- Time-to-proficiency (↘). Sessions to hit competency rubric milestones.

Analytics: per-session trend lines + bootstrapped CIs; PR-AUC for Lapse, calibration error (ECE), and threshold-level lift curves for explainability.
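Several of these KPIs reduce to simple counts over the per-sample state stream. The sketch below assumes a uniformly sampled list of state labels and a list of alert timestamps; the function names are hypothetical.

```python
def high_load_min_per_hour(states) -> float:
    """Fraction of samples labeled High-Load, scaled to minutes per hour."""
    if not states:
        return 0.0
    hl = sum(1 for s in states if s == "High-Load")
    return 60.0 * hl / len(states)


def state_flips(states) -> int:
    """Number of state changes; lower means less chatter near thresholds."""
    return sum(1 for a, b in zip(states, states[1:]) if a != b)


def alerts_per_min(alert_times_s, session_s: float) -> float:
    """Alert burden over a session of the given length in seconds."""
    return len(alert_times_s) / (session_s / 60.0)


states = ["Normal"] * 45 + ["High-Load"] * 15
hl_rate = high_load_min_per_hour(states)
flips = state_flips(states)
burden = alerts_per_min([30, 95, 160, 250, 400, 580], session_s=600.0)
```

Computing `state_flips` on the same stream with and without hysteresis gives the stability comparison, and `alerts_per_min` can be checked against the 0.6/min deployment target.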

Live clinical-style table with literature ranges

Product vs. Lab — how they fit

The Lab teaches the concepts and lets instructors tune policy; the Dashboard runs that policy live with guardrails and explains why an alert fired.

Operational app (left) vs. pedagogy sandbox (right)

| Aspect | Production Dashboard | Training Lab |
|---|---|---|
| Purpose | Real-time monitoring & decision support | Theory exploration & threshold policy tuning |
| Audience | Clinicians, safety officers | Educators, researchers |
| Features | Live gt table, tuned alerts, evidence fusion (pupil + HRV + grip/tremor) | Three paradigms (Adaptive Gain, SDT+hysteresis, Time-on-Task) |
| Latency | < 1 s UI; heavier data load | Instant |
| Interactivity | Threshold policies runnable; microbreak logging | Side-by-side policy comparisons; sliders |
| Calibration | XGBoost + Platt scaling; reliability curve (ECE/Brier) | Not model-based; exports policy defaults |
| Status | Stable, no extra wearables UI | Experimental, fast iteration |

Note. The Lab defines pedagogy & defaults; the Dashboard executes with calibrated probabilities and alert hygiene.

From SST to the OR — what transferred

- Alert hygiene over cleverness. A good model with bad alerting is a bad product. We optimize nuisance rate and edge-chatter, not just AUC.

- Interpretability on contact. “High-Load confirmed (TEPR↑ + RMSSD↓)” is actionable; a confusion matrix mid-case is not.

- Friction kills adoption. Every cable/cap/password taxes attention. That’s why this stays zero-headgear with <60-second setup.

Deployment & Privacy

Runs as a Docker image or on ShinyApps; can embed in training portals with a single <iframe>. By default, surgeon biometrics are ephemeral: we retain only de-identified aggregates (for QA/research) with explicit consent. The system is assistive, not autonomous—the clinician remains in the loop, with visible rationale for alerts.

What’s next — tailored pedagogy

- Estimate the trainee’s arousal band. Use early sessions to fit a simple sweet-spot width (from TEPR/HRV vs performance), then personalize alert sensitivity.

- Phase-aware coaching. Different thresholds for docking, dissection, suturing; tighten only where error costs are high.

- Post-hoc debriefs. Auto-compile moments of sustained overload (≥30 s) with linked video, instructor notes, and the coaching action taken.

- Prospective classroom studies. Randomize microbreak timing/pacing advice and measure effects on recovery latency and learning curves.